September 12, 2017, was the launch day for Hillary Clinton’s autobiographical account of the 2016 election she lost to Donald Trump, definitively entitled ‘What Happened’. By midday 1669 reviews had been written on Amazon.com. By 3pm over half of the reviews, all with 1-star ratings, had been deleted by Amazon and a new review page for the book had been set up. After Day 1, ‘What Happened’ had over 600 reviews and an almost perfect 5 rating. What happened?!

Figure 1: Genuine support or fake reviews? Hillary Clinton’s ‘What Happened’ Amazon rating 1 day after launch (and after all the negative reviews were deleted)

There were good reasons to view the flood of negative reviews as suspicious. Only 20% of the reviews had a verified purchase and the ratio of 5-star to 1-star reviews – 44%-51% – was highly irregular; the vast majority of products reviewed on Amazon.com display an asymmetric bimodal (J-shaped) ratings distribution (see Hu, Pavlou and Zhang, 2009), in which there is a concentration of 4 or 5 star reviews, a number of 1-star reviews and very few 2 or 3 star reviews. The charts in Figure 2 below, originally featured in this QZ article, show the extent to which ‘What Happened’ was initially a ratings and purchase pattern outlier.

Figure 2: Two charts indicating the unusual reviewing behaviour for ‘What Happened’. Source: Ha, 2017

Faced with accusations of pro-Clinton bias as a result of deleting only negative reviews, an Amazon spokesperson confirmed that the company, in taking action against “content manipulation”, looks at indicators such as the ‘burstiness’ of reviews (high rate of reviews in a short time period) and the relevance of the content – but doesn’t delete reviews simply based on their rating or their verified status (Hijazi, 2017).

It would appear that Amazon have taken on board the academic literature suggesting that burstiness is a feature of review spammers and deceptive reviews (e.g. this excellent paper by Geli Fei, Arjun Mukherjee, Bing Liu et al.) and that it is right to interpret a rush of consecutive negative reviews close to a book launch as suspicious.

But what about the subsequent burst of 600+ positive reviews? One might expect the Clinton PR machine to mobilize its own ‘positive review brigade’ in anticipation of, or in response to, a negative ‘astroturfing’ campaign against her book. One could even argue that it would be foolish not to manage perceptions of such a controversial and polarising book launch. If positive review spam is identified, should it also be deleted?

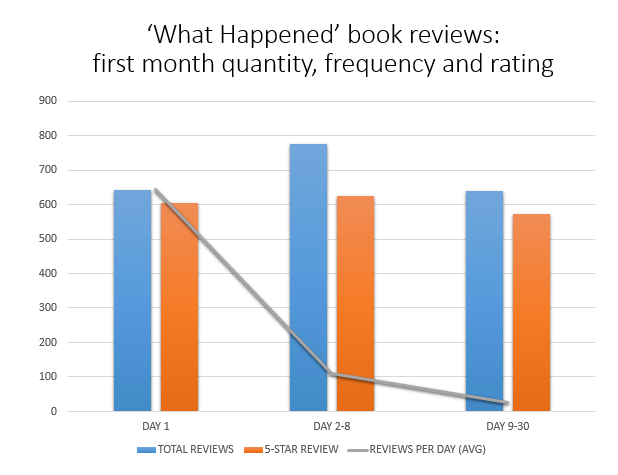

I tracked the number of Amazon reviews of ‘What Happened’ for a month after its launch on the new ‘clean’ book listing (the listings have since been merged but you can see my starting point here). Figure 3 below shows clear signs of ‘burstiness’: the rate of reviewing decreased exponentially over the first month, even while the proportion of 5-star reviews remained consistently high.

Figure 3: Number and frequency of ‘What Happened’ reviews in the first 30 days following its launch and deletion of negative reviews.
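One way to put a number on this kind of decay is to fit an exponential curve to the daily review counts. The snippet below is only a minimal sketch under stated assumptions: the daily counts are made-up, idealised values (not the tracked ‘What Happened’ data), and the function names `fit_exponential_decay` and `half_life` are hypothetical helpers introduced for illustration.

```python
import math


def fit_exponential_decay(daily_counts):
    """Fit count ~ a * exp(-k * day) by least squares on log(count).

    Days are numbered 0, 1, 2, ... from launch. Days with zero reviews
    are skipped because log(0) is undefined.
    """
    points = [(day, math.log(c)) for day, c in enumerate(daily_counts) if c > 0]
    if len(points) < 2:
        raise ValueError("need at least two days with reviews")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, k)


def half_life(k):
    """Number of days for the daily review rate to halve, given decay rate k."""
    return math.log(2) / k


# ASSUMPTION: idealised, made-up counts that decay by a factor exp(-0.2) per day.
hypothetical_counts = [200 * math.exp(-0.2 * day) for day in range(30)]
a, k = fit_exponential_decay(hypothetical_counts)
print(f"initial rate ~ {a:.1f} reviews/day, decay rate ~ {k:.3f} per day")
print(f"half-life ~ {half_life(k):.1f} days")
```

A short half-life relative to the observation window would be one rough indicator of burstiness, though real counts are noisy and a log-linear fit is only a first approximation.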

So, it is perfectly legitimate to ask whether the ‘What Happened’ reviews were manipulated through ‘planting’ of ‘fake’ 5-star reviews written for financial gain or otherwise incentivised, e.g. in exchange for a free copy of the book, which would circumvent Amazon’s Verified Purchase requirement. With my investigative linguist hat on, I’m wondering whether there are any linguistic patterns associated with this irregular – and potentially deceptive – behaviour. (If there are, these could be used to aid deception detection in the absence of – or in tandem with – non-linguistic ‘metadata’.)

A line of fake review detection research has confirmed linguistic differences between authentic and deceptive reviews, although the linguistic deception cues are not consistent and vary depending on the domain and the audience (see my brief overview in this paper). Since we don’t know the deception features in advance and no ground truth has been established (i.e. we don’t know for sure if there was a deception), I’m going to use two unsupervised learning approaches appropriate for unlabeled data: factor analysis, to find the underlying dimensions of linguistic variation in all the reviews, followed by cluster analysis to segment the reviews into text types based on the dimensions with the hope of finding specific deception clusters.
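The two-step approach can be sketched roughly as follows. This is a hedged illustration, not the analysis itself: it assumes each review has already been converted into a row of numeric linguistic features (e.g. normalised counts of pronouns or past-tense verbs), uses random numbers in place of real features, and picks scikit-learn’s `FactorAnalysis` with a varimax rotation and `KMeans` as stand-ins. The number of factors and clusters, the rotation, and the helper name `cluster_reviews` are all assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import FactorAnalysis
from sklearn.preprocessing import StandardScaler


def cluster_reviews(features, n_factors=3, n_clusters=3, seed=0):
    """Reduce a review-by-feature matrix to factor scores, then cluster them.

    Returns (loadings, scores, labels):
      loadings: array of shape (n_factors, n_features)
      scores:   array of shape (n_reviews, n_factors)
      labels:   cluster index for each review
    """
    # Standardise so that no single feature dominates because of its scale.
    scaled = StandardScaler().fit_transform(features)

    # Step 1: factor analysis to find underlying dimensions of variation.
    fa = FactorAnalysis(n_components=n_factors, rotation="varimax",
                        random_state=seed)
    scores = fa.fit_transform(scaled)

    # Step 2: cluster the reviews in the reduced factor space.
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed)
    labels = km.fit_predict(scores)
    return fa.components_, scores, labels


# ASSUMPTION: random placeholder data standing in for 120 reviews x 8 features.
rng = np.random.default_rng(0)
fake_features = rng.normal(size=(120, 8))
loadings, scores, labels = cluster_reviews(fake_features)
print(loadings.shape, scores.shape, np.bincount(labels))
```

In practice the factor loadings would be inspected to interpret each dimension before clustering, and the number of clusters would need to be justified rather than fixed in advance.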

If there is a text cluster that correlates with ‘burstiness’ – i.e. occurs more frequently in the reviews closest to the book launch date and/or occurs repeatedly within a short time frame – then that would suggest there are specific linguistic styles and/or strategies correlated with this deceptive reviewing behaviour. The existence of such a distinct deception cluster would strongly suggest that Clinton’s PR team gamed the Amazon review system (understandably, in order to counter the negative campaign against the book). Alternatively, different reviewing strategies might be distributed randomly across the review corpus, unrelated to how close each review was written to the book launch date. This would weaken the argument that linguistic variation in the reviews is a potential deception cue. The two scenarios are illustrated in Figure 4 below:

Figure 4: Hypothetical illustration of how review text types (clusters) might be distributed over a 30 day period in the case of astroturfed fake reviews (top) or genuine positive reviews (bottom).
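A simple way to check which of the two scenarios holds is to cross-tabulate cluster membership against time period and compute a chi-square statistic. The sketch below is an assumption-laden illustration: the `burst_days` cut-off of 3 days, the toy data, and the helper name `chi_square_cluster_by_period` are all hypothetical, and the statistic would still need to be compared against a chi-square critical value (or a permutation baseline) before drawing conclusions.

```python
from collections import Counter


def chi_square_cluster_by_period(days, clusters, burst_days=3):
    """Chi-square statistic for a cluster x period ('burst' / 'later') table.

    days:     days since launch for each review (0 = launch day)
    clusters: cluster label for each review, in the same order
    Returns (statistic, degrees_of_freedom).
    """
    if len(days) != len(clusters):
        raise ValueError("days and clusters must have the same length")
    periods = ["burst" if d < burst_days else "later" for d in days]
    observed = Counter(zip(clusters, periods))
    cluster_totals = Counter(clusters)
    period_totals = Counter(periods)
    n = len(days)

    stat = 0.0
    for c, c_total in cluster_totals.items():
        for p, p_total in period_totals.items():
            # Expected count if cluster and period were independent.
            expected = c_total * p_total / n
            stat += (observed[(c, p)] - expected) ** 2 / expected
    dof = (len(cluster_totals) - 1) * (len(period_totals) - 1)
    return stat, dof


# ASSUMPTION: toy data. Clusters spread evenly across both periods ...
even = chi_square_cluster_by_period([0, 0, 5, 5], ["A", "B", "A", "B"])
# ... versus one cluster confined entirely to the launch burst.
bursty = chi_square_cluster_by_period([0, 0, 5, 5], ["A", "A", "B", "B"])
print(even)    # (0.0, 1)
print(bursty)  # (4.0, 1)
```

A statistic near zero corresponds to the bottom panel of Figure 4 (styles spread evenly over time), while a large value corresponds to the top panel (a style concentrated near the launch).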

My prediction? Surely, Hillary Clinton’s PR team would not be so brazen as to solicit fake positive reviews in bulk and in an organised fashion. Yes, there were a disproportionate number of reviews written in the first few days but I believe this was a spontaneous groundswell of genuine support. I do expect there to be a few different types of linguistic review style, reflecting the different ways in which books can be reviewed (e.g. focus on book content; retell personal reading experience; address the reader – these are some of the review styles I presented at the ICAME39 (2018) conference in Tampere). However, if the support is spontaneous I would expect these review styles not to be correlated with burstiness or other deceptive phenomena but to occur randomly throughout the month.

Check back here in a few days for Part #2: Results and Discussion!
